Building towards a Flexible Dynamic Autoscaling Architecture with Ray / EKSRay - Part 1

In today’s data-centric landscape, organizations increasingly depend on processing vast datasets to extract actionable insights and maintain a competitive edge. However, as data volumes grow, traditional data processing platforms (such as Spark) often face challenges including increased memory overhead, complex cluster configurations, and escalating platform costs, especially when scaling from small-scale tests to full production workloads. For CMT, where we work with millions of miles of multi-modal vehicular sensor data, these challenges were a significant limiting factor.

To address these limitations, our Data Science teams at CMT explored alternative solutions. We eventually adopted Ray, a highly customizable distributed computing framework built with a Python-first approach and designed for high-performance parallel workloads. Ray’s architecture makes it easy to scale clusters up and down elastically and supports large-scale data experiments more affordably.

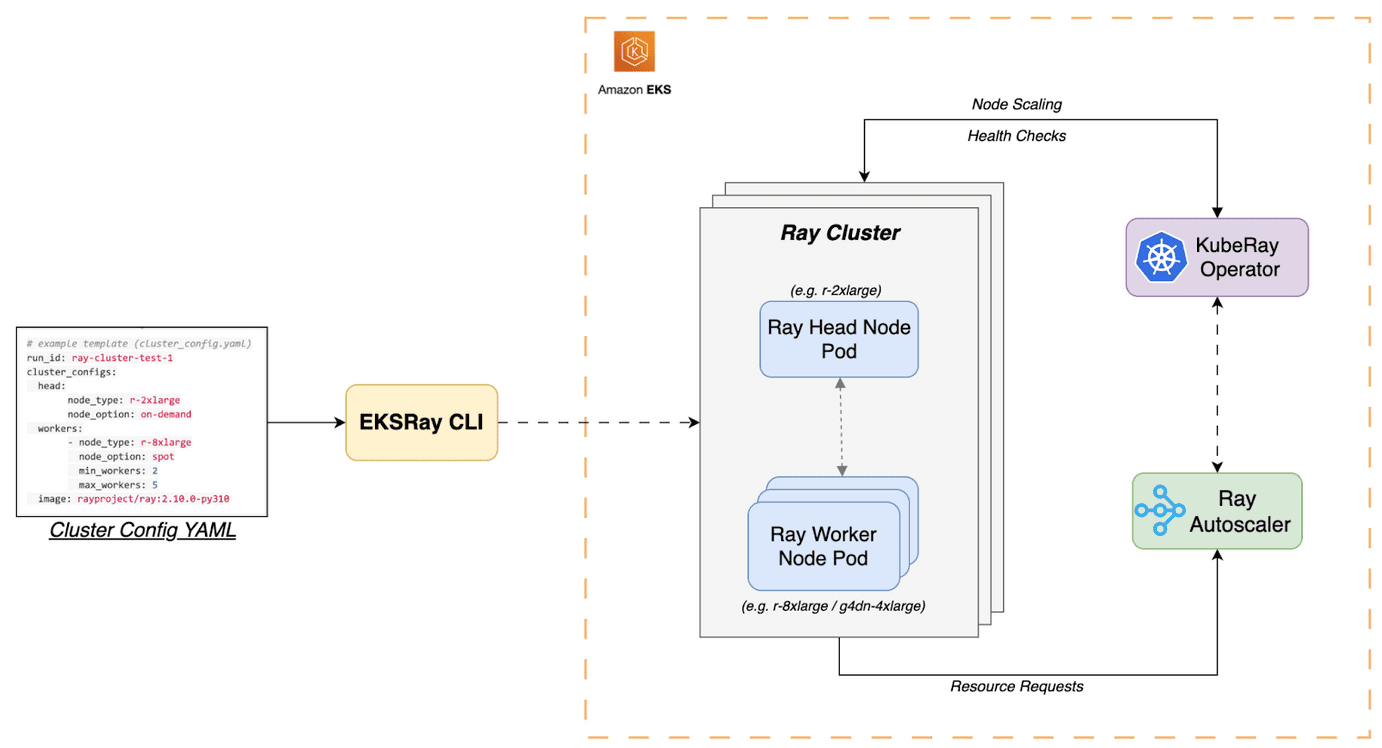

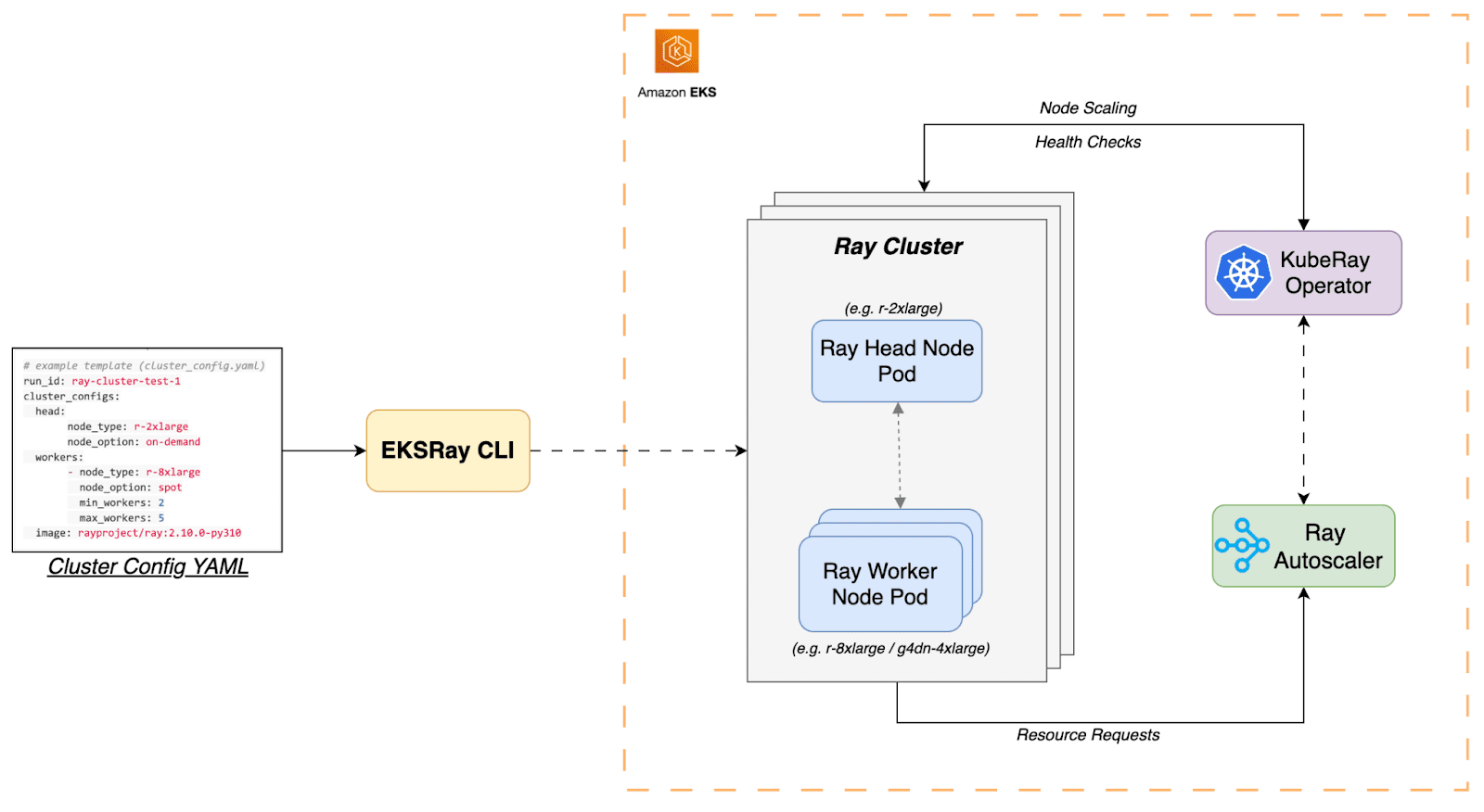

To harness Ray’s potential, we developed EKSRay, an in-house data science platform that integrates Ray’s parallel computing capabilities with Kubernetes – a flexible and open-source container orchestration platform. In our previous blog post “Scaling Deep Learning Workflows with EKSRay”, we introduced the Ray architecture and its benefits for distributed tasks. In this article, we delve deeper into the specifics of EKSRay as a platform, explain its various components, and describe its advantages. At CMT, EKSRay provides us with a unified platform that not only curbs cloud expenses but also simplifies infrastructure management, even as data processing demands scale to substantial levels.

EKSRay – A Closer Look

EKSRay at CMT is an in-house Data Science computing platform built on a combination of well-established and actively evolving technologies:

- Amazon EKS (Elastic Kubernetes Service)

- KubeRay (the Ray team’s Kubernetes operator)

- The EKSRay CLI-tool

Amazon EKS – Our SRE team manages the Amazon EKS side of the infrastructure entirely with Terraform. EKS provides a fully managed service that enables us to run Kubernetes seamlessly within our existing AWS ecosystem. This automates cluster provisioning and maintenance, and offers straightforward container scheduling, resource scaling, compute optimization, and data storage at scale—while integrating smoothly with the rest of our AWS infrastructure.

KubeRay – KubeRay is an open-source Kubernetes operator that simplifies the deployment and management of Ray applications on Kubernetes. It acts as the main connector between our EKS-based infrastructure and Ray-based applications. By defining CRDs (Custom Resource Definitions), KubeRay lets users specify high-level compute requirements, which the operator translates into the lower-level details needed to spin up RayClusters and submit RayJobs. KubeRay (and Ray itself) is actively developed by the Ray team and contributors at Google/GKE.
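
To make the idea of a CRD concrete, here is a minimal sketch of what a RayCluster custom resource can look like, built as a plain Python dictionary and printed as JSON. The field names follow KubeRay's RayCluster schema, but the helper function, cluster name, image tag, and sizes are placeholders for illustration and not EKSRay's actual configuration.

```python
import json


def ray_cluster_manifest(name, worker_cpus, min_workers, max_workers, image):
    """Build a minimal KubeRay RayCluster custom resource as a plain dict."""

    def pod_template(container_name, cpus):
        # Head and worker pods share the same (placeholder) Ray image here.
        return {
            "spec": {
                "containers": [
                    {
                        "name": container_name,
                        "image": image,
                        "resources": {"requests": {"cpu": str(cpus)}},
                    }
                ]
            }
        }

    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayCluster",
        "metadata": {"name": name},
        "spec": {
            # Let the Ray autoscaler adjust worker replicas between min and max.
            "enableInTreeAutoscaling": True,
            "headGroupSpec": {
                "rayStartParams": {},
                "template": pod_template("ray-head", 2),
            },
            "workerGroupSpecs": [
                {
                    "groupName": "cpu-workers",
                    "replicas": min_workers,
                    "minReplicas": min_workers,
                    "maxReplicas": max_workers,
                    "rayStartParams": {},
                    "template": pod_template("ray-worker", worker_cpus),
                }
            ],
        },
    }


manifest = ray_cluster_manifest(
    name="demo-cluster",            # hypothetical cluster name
    worker_cpus=4,
    min_workers=0,
    max_workers=10,
    image="rayproject/ray:latest",  # placeholder image tag
)
print(json.dumps(manifest, indent=2))
```

The user only states the shape of the cluster (one head, a worker group with a replica range); creating and reconciling the underlying pods is left to the operator.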

EKSRay CLI-tool – The EKSRay CLI-tool connects end users to our EKS-based Ray platform. It handles everything from provisioning user-specific EKSRay environments to spawning RayClusters and jobs, monitoring clusters, visualizing dashboards, launching Jupyter servers or VS Code environments, managing cluster credentials, and interfacing with Kubernetes via kubectl. It was created by the Data Science department at CMT and is maintained by MLOps. Recent releases have made it more developer-friendly, offering:

- Fully integrated Jupyter environments and direct VS Code integration

- Easily configurable (and more flexible) RayCluster spawning

- A new “RayJob” system for job-level automation and metrics tracking

It has been exciting to see this platform evolve, not only from an end-user perspective—where new features and conveniences keep coming—but also from the inside out. This includes improvements to open-source tools, automation of EKS environments, cross-department collaboration at CMT, and standardized frameworks for EKSRay. Our goal is to continue enhancing the capabilities of EKSRay as a flexible, cost-effective, low-maintenance, and highly efficient computing platform for Data Science and development at CMT.

What can EKSRay do?

In large-scale data science projects, a typical development pipeline might involve:

- Interfacing with database services such as RDS and Redshift or data lake services like S3

- Performing ETL processes for data assimilation and refinement

- Training models with support for distributed computing at scale

- Running model inference and generating metrics

- Handling post-processing, tracking, and publishing tasks

It’s crucial to ensure seamless integration across all these steps to minimize friction for data scientists. Equally important is the ability to transition from small-scale prototypes (with sandbox datasets) to large-scale production workloads without introducing unnecessary complexity for end users. EKSRay was designed with this in mind—making it straightforward to prototype and then scale up as needed.

With this goal at the forefront, EKSRay offers two primary modes of operation for end users:

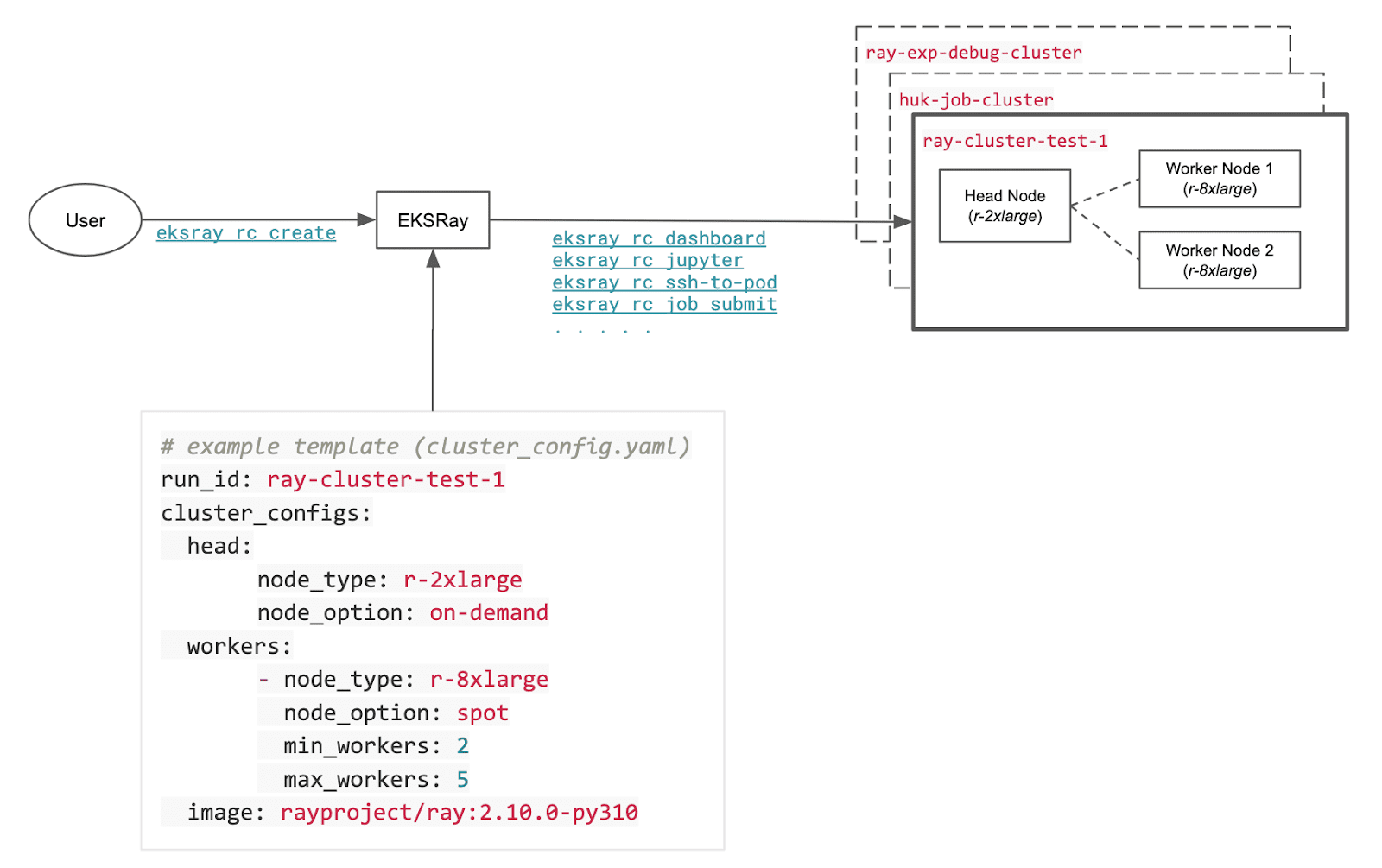

RayClusters (eksray rc)

A RayCluster is a set of machines—consisting of one head node (driver machine) and multiple worker nodes—that communicate with the KubeRay Operator and Ray Autoscaler. This setup gives you:

- A ready-to-use Ray network that spans every node in the cluster as soon as it is spawned

- The ability to autoscale worker nodes or reconfigure them dynamically

- A containerized environment loaded with the tools required for development and production

- Pre-integrated connections to the relevant databases and data lakes, aligned with your AWS region and production environment
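
As a rough intuition for the autoscaling point above: Ray's autoscaler reacts to the resources that pending tasks and actors request, rather than to measured CPU utilization. The sketch below is a deliberately simplified model of that decision for a single worker group; the function name and the numbers are illustrative assumptions, and the real autoscaler handles multiple resource types and node groups.

```python
import math


def target_workers(pending_cpus, cpus_per_worker, min_workers, max_workers):
    """Workers needed to satisfy the requested CPUs, clamped to the group limits."""
    if cpus_per_worker <= 0:
        raise ValueError("cpus_per_worker must be positive")
    needed = math.ceil(pending_cpus / cpus_per_worker)
    return max(min_workers, min(needed, max_workers))


# Illustrative demand levels for a group of 4-CPU workers, limited to 1..20 nodes.
for demand in (0, 10, 37, 500):
    workers = target_workers(demand, cpus_per_worker=4, min_workers=1, max_workers=20)
    print(f"{demand} requested CPUs -> {workers} workers")
```

With no demand the group shrinks to its minimum, and very large demand is capped at the maximum, which is what keeps costs bounded.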

RayClusters are ideal for everyday development tasks. They let you easily spawn interactive Jupyter notebooks or integrated VS Code environments, access Ray Dashboards, and synchronize data bi-directionally. Perhaps the best part is the cost model: these clusters run on standard EC2 instances, so you only pay for the underlying EC2 resources (no extra license or platform fees). This keeps development costs flexible and competitive compared to other solutions.

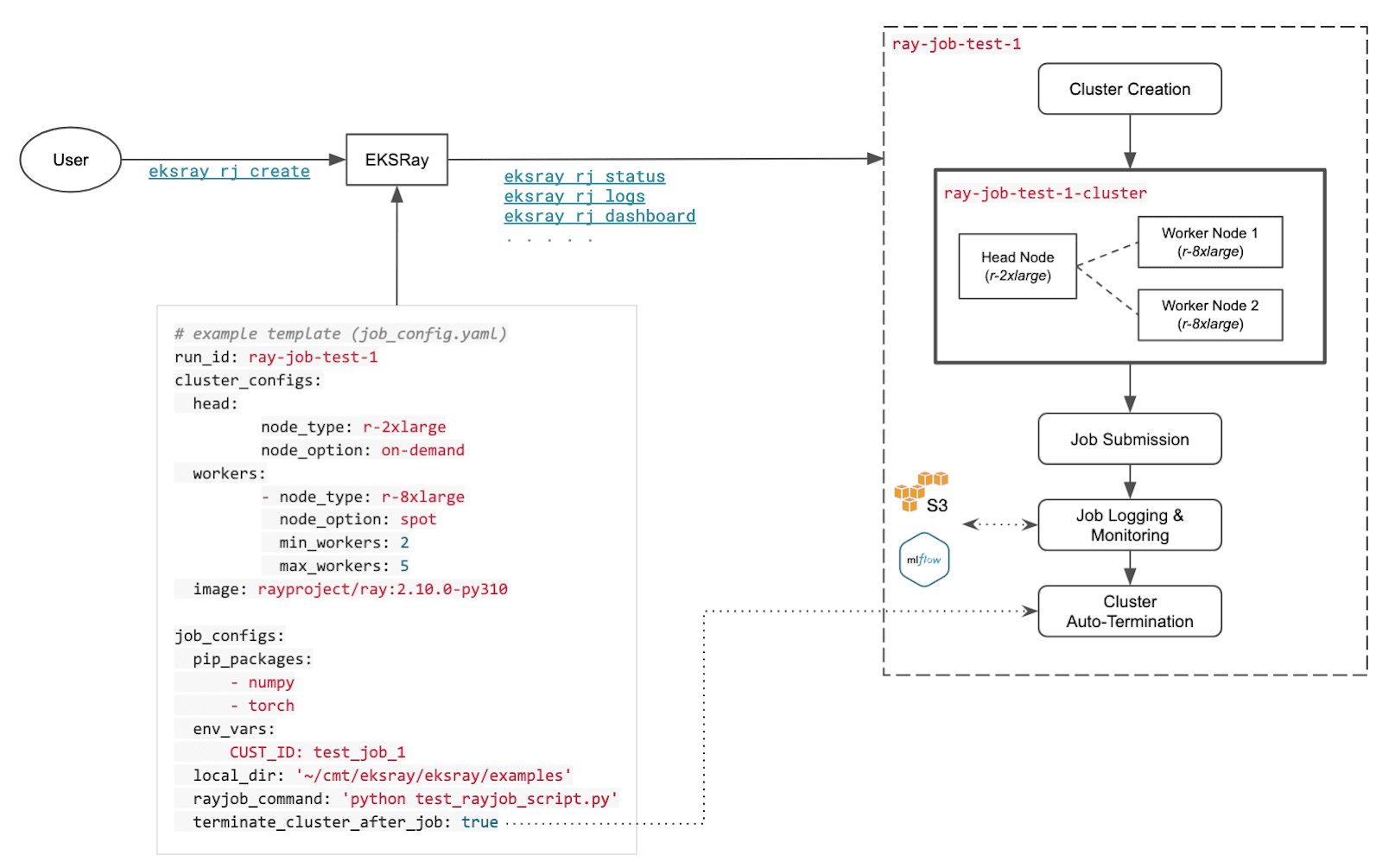

RayJobs (eksray rj)

RayJobs are EKSRay’s automated pipeline feature. Although RayJobs also run on RayClusters in the background, they automate many key steps in the pipeline lifecycle, including:

- Spawning a RayCluster

- Running your job payload as defined by the job script and environment configuration

- Tracking the RayJob’s progress and monitoring cluster resources

- Managing job-level logging and persisting results and outputs

- Automatically terminating the RayCluster once the job is complete
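
The lifecycle above can be sketched in plain Python. This is not the EKSRay implementation; `ephemeral_cluster` and `run_job` are hypothetical stand-ins that only record events, chosen to show one property that matters for cost: the cluster is torn down whether the payload succeeds or fails.

```python
from contextlib import contextmanager

events = []  # stands in for job-level logging


@contextmanager
def ephemeral_cluster(name):
    """Hypothetical stand-in for spawning and tearing down a RayCluster."""
    events.append(f"spawn {name}")
    try:
        yield name
    finally:
        # Runs on success and on failure, so the cluster never outlives the job.
        events.append(f"terminate {name}")


def run_job(name, payload):
    """Run a payload on a short-lived cluster and report its final status."""
    with ephemeral_cluster(name) as cluster:
        events.append(f"run payload on {cluster}")
        try:
            result = payload()
            events.append("persist results")
            return "SUCCEEDED", result
        except Exception as exc:
            events.append(f"job failed: {exc}")
            return "FAILED", None


status, result = run_job("demo-job", lambda: sum(range(10)))
print(status, result)
print(events)
```

Tying termination to the end of the job, rather than to a user remembering to shut things down, is what makes this mode suitable for unattended pipelines.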

This workflow is central to resource-based parallelism and autoscaling. It lets you design a pipeline in which each specialized task runs on its own custom worker-machine type, and these tasks can be chained together. The result is a flexible “per-task worker type” system that orchestrates everything through a single, coherent process. RayJobs are especially well-suited for building end-to-end pipelines for model development and large-scale testing. One of our Telematics-event modelling teams, for example, uses RayJobs to run contained ETL, training, and inference workloads with dynamic autoscaling, all while maintaining high resource utilization (often over 95% CPU and GPU utilization) at standard EC2 costs. We will return to these RayJob capabilities in future articles on resource-based parallelism and autoscaling.
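
To illustrate the “per-task worker type” idea conceptually, the sketch below maps each pipeline stage to the smallest worker group that can satisfy its resource request. The stage names, worker groups, and selection rule are all hypothetical; in Ray itself, tasks declare their needs through options such as `num_cpus`, `num_gpus`, and custom `resources`, and the scheduler and autoscaler do the placement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    cpus: int = 1
    gpus: int = 0


# Hypothetical worker groups: name -> resources that one worker offers.
WORKER_GROUPS = {
    "cpu-small": {"cpus": 4, "gpus": 0},
    "cpu-large": {"cpus": 32, "gpus": 0},
    "gpu": {"cpus": 8, "gpus": 1},
}


def pick_worker_group(stage):
    """Return the smallest worker group that satisfies the stage's request."""
    candidates = [
        (spec["gpus"], spec["cpus"], name)
        for name, spec in WORKER_GROUPS.items()
        if spec["cpus"] >= stage.cpus and spec["gpus"] >= stage.gpus
    ]
    if not candidates:
        raise ValueError(f"no worker group fits stage {stage.name!r}")
    # Prefer groups without GPUs, then fewer CPUs, to avoid over-provisioning.
    return min(candidates)[2]


pipeline = [
    Stage("etl", cpus=16),
    Stage("train", cpus=8, gpus=1),
    Stage("inference", cpus=2),
]
plan = {stage.name: pick_worker_group(stage) for stage in pipeline}
print(plan)
```

Because each stage only occupies the machine type it needs, expensive GPU workers are requested for training alone, which is one way high utilization at low cost becomes achievable.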

Conclusion

Data Engineering and Data Science teams regularly encounter significant challenges when scaling traditional data processing platforms to meet the demands of large-scale workloads. Memory overhead, complex cluster configurations, and rising cloud costs quickly turn from minor annoyances into major roadblocks.

Our setup with EKSRay offers a compelling solution by integrating Ray’s powerful distributed computing capabilities with Kubernetes’ flexible resource management. Built atop Amazon EKS, KubeRay, and the EKSRay CLI, this architecture streamlines scalability, minimizes infrastructure overhead, and significantly reduces costs—allowing your team to focus more on innovation and less on infrastructure.

We’ve introduced EKSRay’s key components and capabilities, particularly emphasizing how RayClusters and RayJobs provide flexibility and ease of use, from interactive development to automated job pipelines. However, this is just the foundation.

In Part 2, we’ll dive deeper into EKSRay’s advanced techniques for resource-based parallelism and dynamic autoscaling. We’ll explore Custom Resource Tagging, Placement Groups, Gang Scheduling, and Single-config Hybrid RayJobs in depth, with practical examples that show how EKSRay manages resources to optimize efficiency and minimize costs.

Stay tuned for the next part, where we show how EKSRay delivers a dynamic autoscaling architecture that scales up seamlessly with increasing workloads.